Non-linear Activations (weighted sum, nonlinearity)

| 激活函数 | 简介 | 图示 |

|---|---|---|

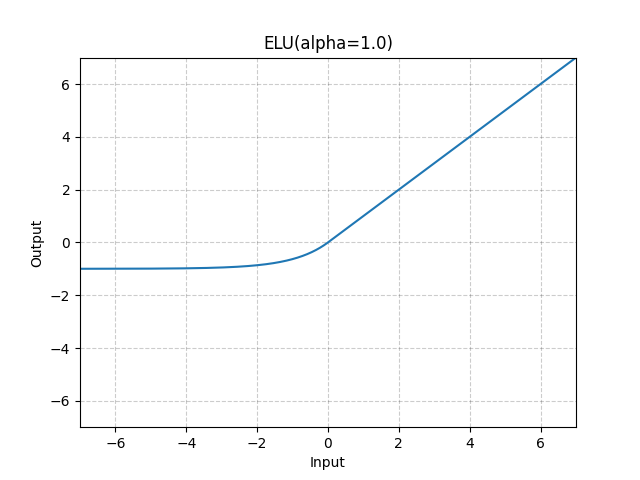

| nn.ELU | Exponential Linear Unit (ELU) function, element-wise. |  |

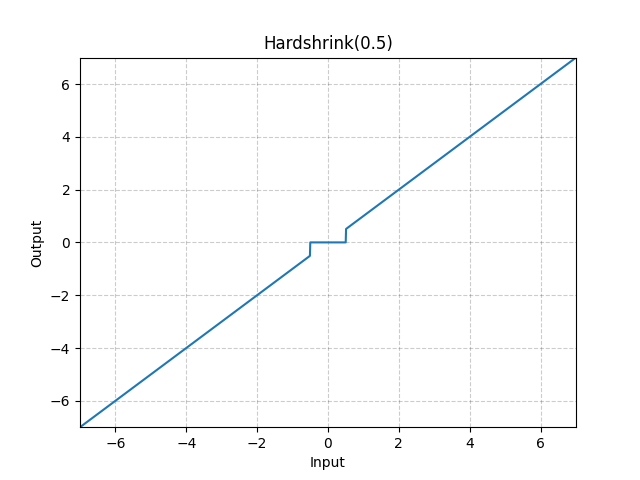

| nn.Hardshrink | Hard Shrinkage (Hardshrink) function element-wise. |  |

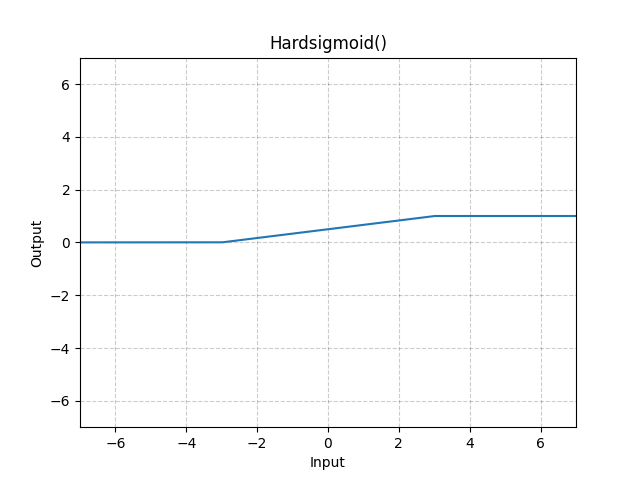

| nn.Hardsigmoid | Hardsigmoid function element-wise. |  |

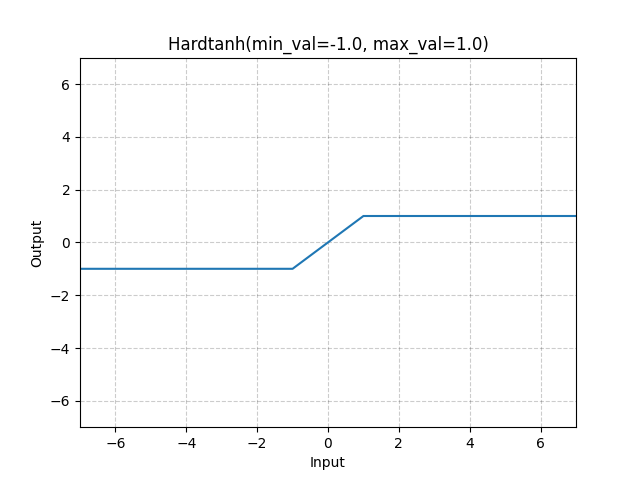

| nn.Hardtanh | HardTanh function element-wise. |  |

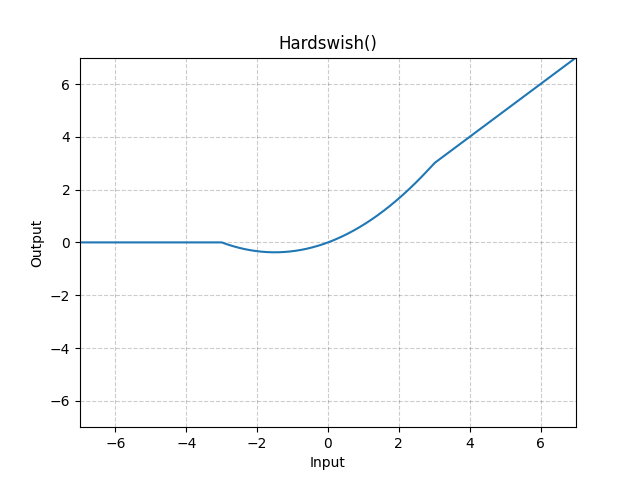

| nn.Hardswish | Hardswish function, element-wise. |  |

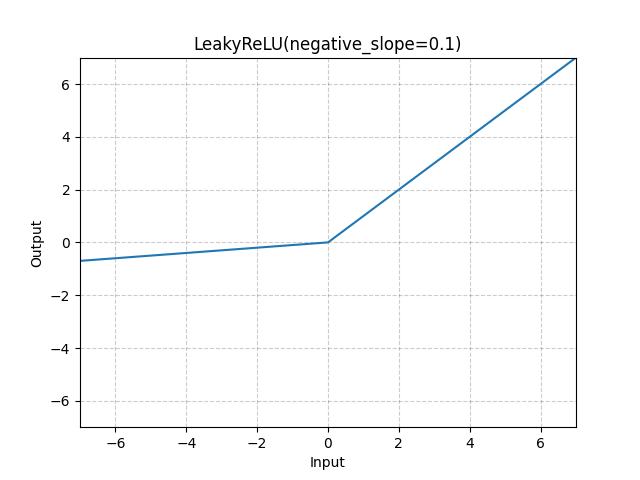

| nn.LeakyReLU | LeakyReLU function element-wise. |  |

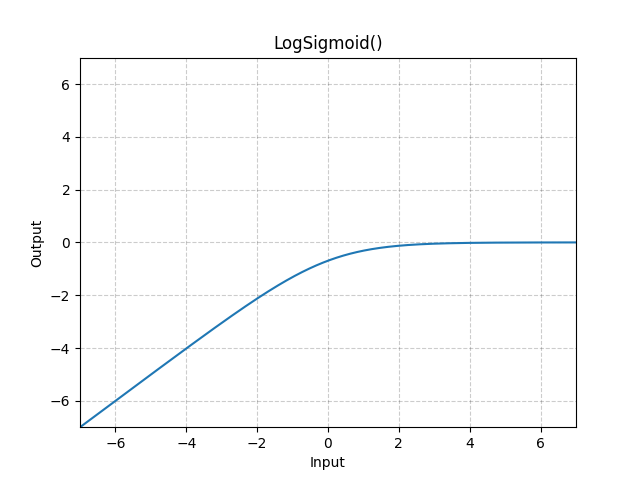

| nn.LogSigmoid | Logsigmoid function element-wise. |  |

| nn.MultiheadAttention | Allows the model to jointly attend to information from different representation subspaces. |  |

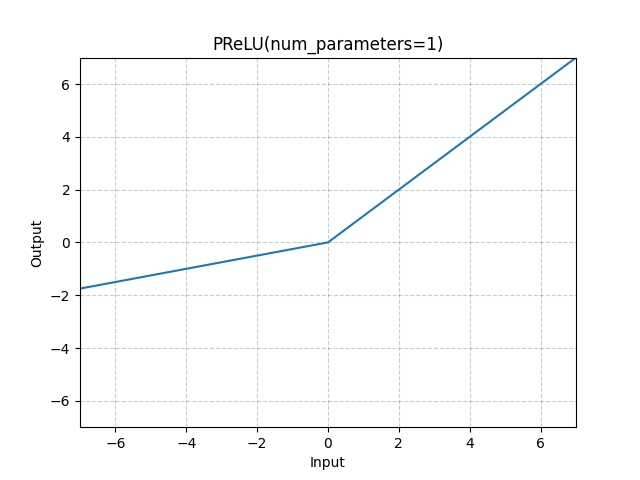

| nn.PReLU | element-wise PReLU function. |  |

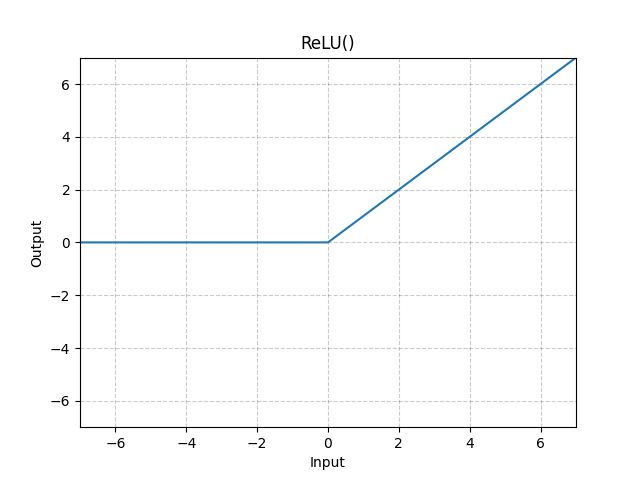

| nn.ReLU | rectified linear unit function element-wise. |  |

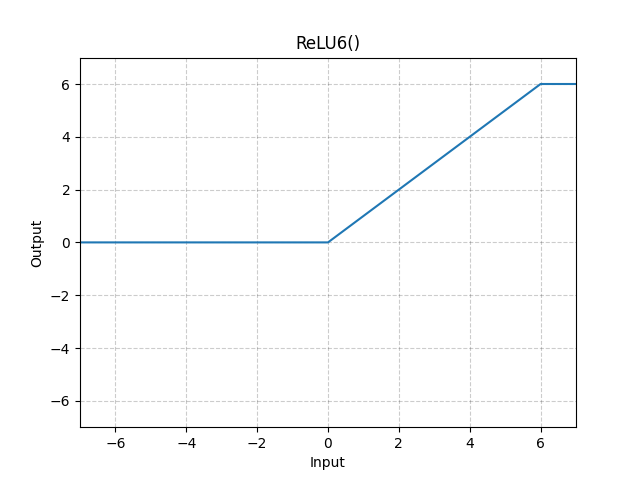

| nn.ReLU6 | ReLU6 function element-wise. |  |

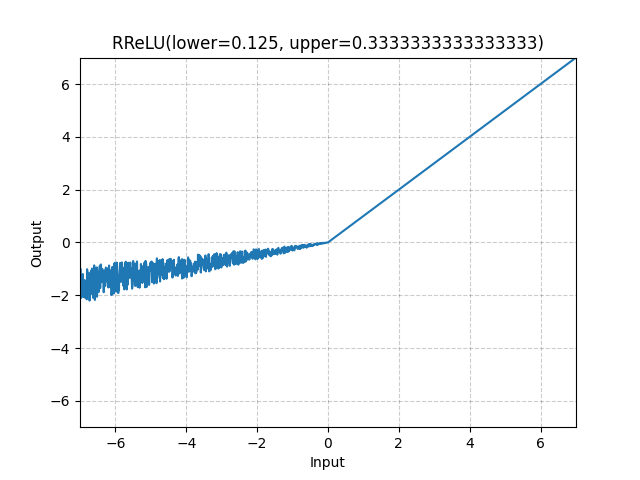

| nn.RReLU | randomized leaky rectified linear unit function, element-wise. |  |

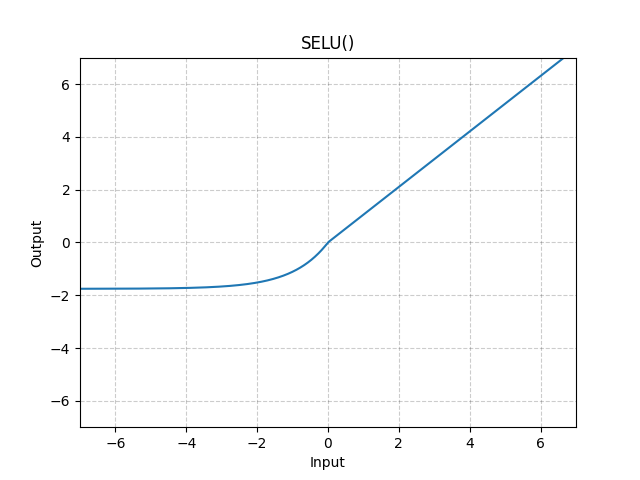

| nn.SELU | SELU function element-wise. |  |

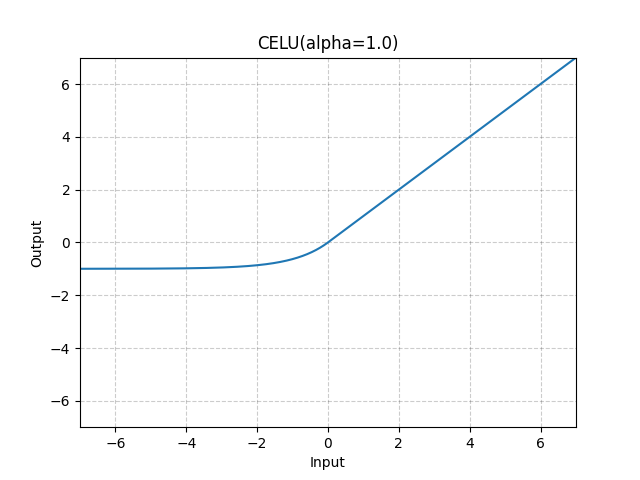

| nn.CELU | CELU function element-wise. |  |

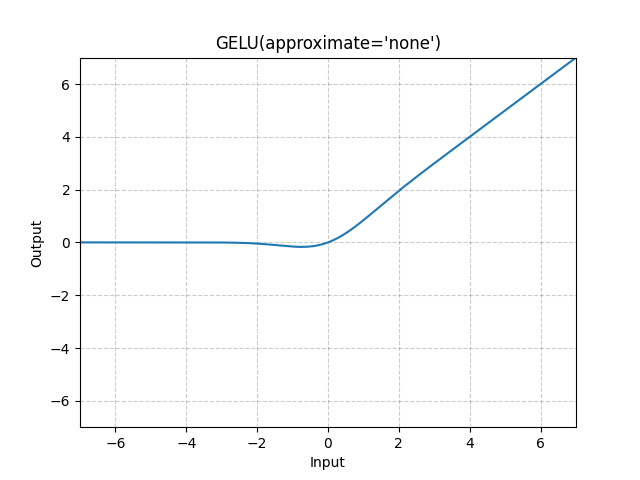

| nn.GELU | Gaussian Error Linear Units function. |  |

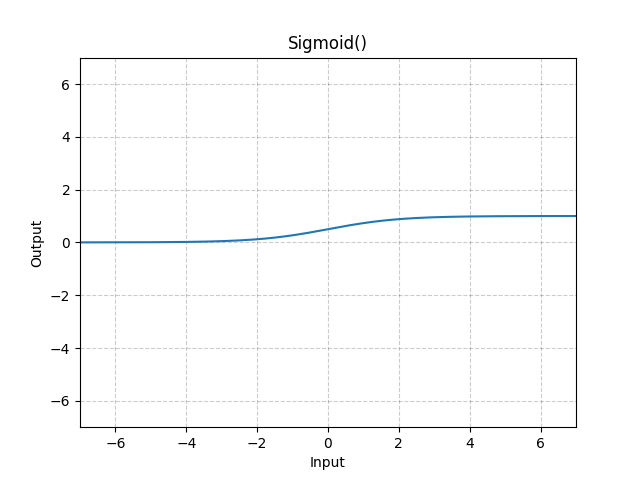

| nn.Sigmoid | Sigmoid function element-wise. |  |

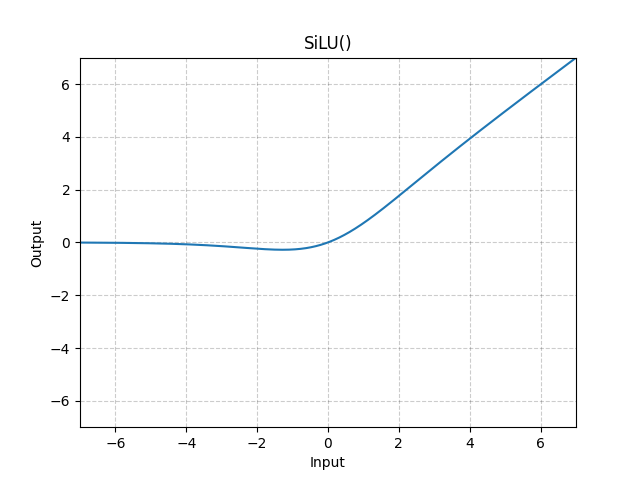

| nn.SiLU | Sigmoid Linear Unit (SiLU) function, element-wise. |  |

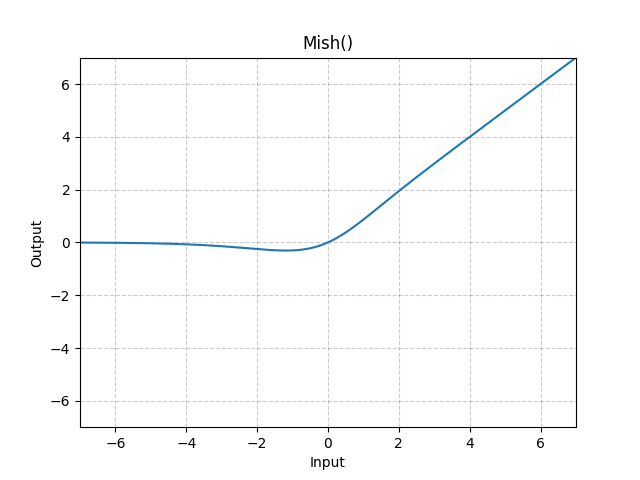

| nn.Mish | Mish function, element-wise. |  |

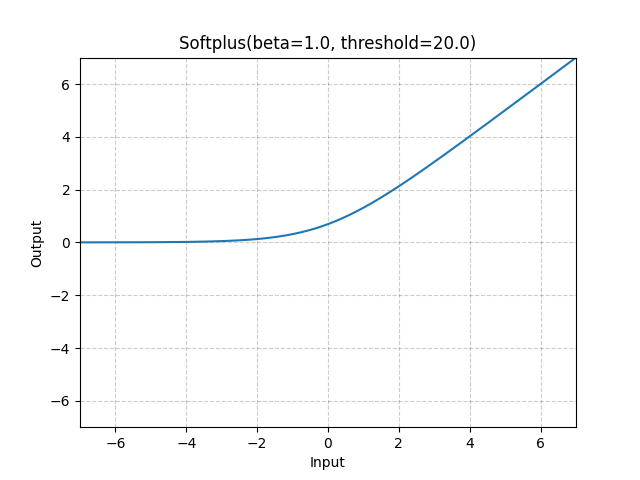

| nn.Softplus | Softplus function element-wise. |  |

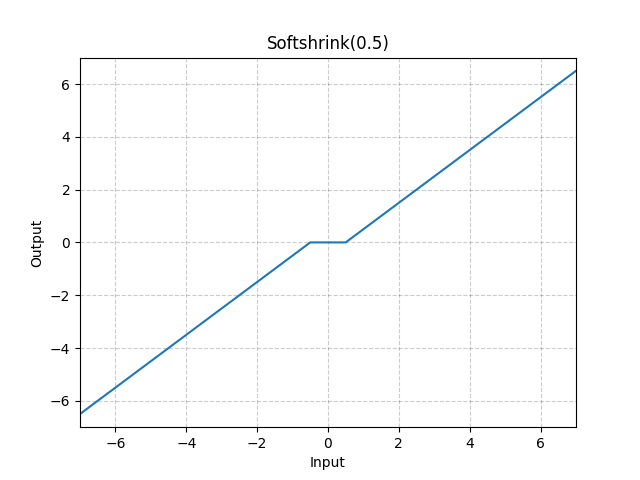

| nn.Softshrink | soft shrinkage function element-wise. |  |

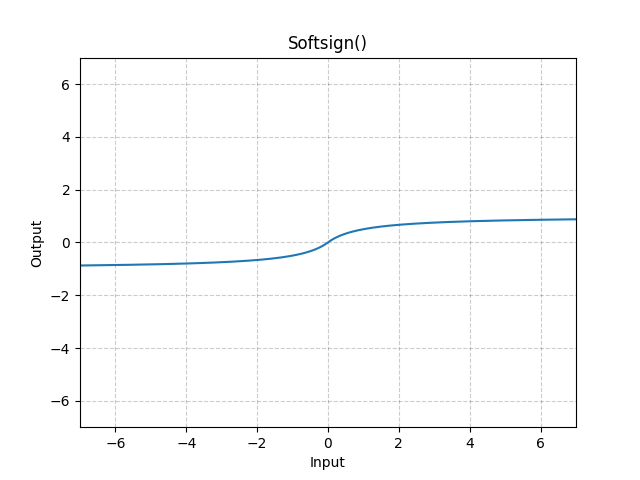

| nn.Softsign | element-wise Softsign function. |  |

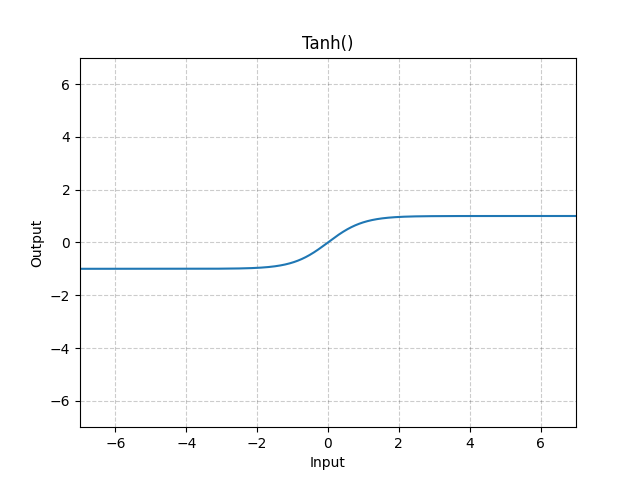

| nn.Tanh | Hyperbolic Tangent (Tanh) function element-wise. |  |

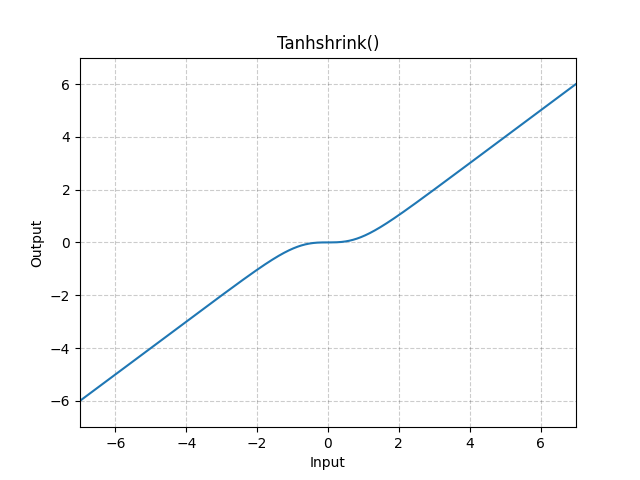

| nn.Tanhshrink | element-wise Tanhshrink function. |  |

| nn.Threshold | Thresholds each element of the input Tensor. | $\begin{cases} x & x >Threshold\ value & otherwise \end{cases}$ |

| nn.GLU | gated linear unit function. | 输入矩阵根据输入参数分割成两个子矩阵a和b,计算矩阵 a *b |
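To make the element-wise functions in the table concrete, here is a minimal pure-Python sketch of their scalar formulas. It is an illustration only, not PyTorch's implementation: the function names are hypothetical, defaults are assumed to follow the PyTorch module defaults, `softplus` omits the `threshold` linearization that `nn.Softplus` applies, and `glu` works on a flat list rather than a tensor dimension. PReLU and RReLU are covered only indirectly (they share the shape of `leaky_relu` with a learned or randomized slope).

```python
import math

# Scalar reference formulas (pure Python, illustration only).
# Default parameters are assumed to match the PyTorch module defaults.

def relu6(x):
    # ReLU clipped at 6: min(max(0, x), 6)
    return min(max(0.0, x), 6.0)

def leaky_relu(x, negative_slope=0.01):
    # nn.PReLU has the same shape, but the slope is learned
    return x if x >= 0 else negative_slope * x

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def celu(x, alpha=1.0):
    return max(0.0, x) + min(0.0, alpha * (math.exp(x / alpha) - 1.0))

SELU_ALPHA = 1.6732632423543772848170429916717
SELU_SCALE = 1.0507009873554804934193349852946

def selu(x):
    return SELU_SCALE * (max(0.0, x) + min(0.0, SELU_ALPHA * (math.exp(x) - 1.0)))

def hardtanh(x, min_val=-1.0, max_val=1.0):
    return min(max(x, min_val), max_val)

def hardsigmoid(x):
    # 0 for x <= -3, 1 for x >= 3, x/6 + 1/2 in between
    return min(max(x / 6.0 + 0.5, 0.0), 1.0)

def hardswish(x):
    # x * ReLU6(x + 3) / 6
    return x * relu6(x + 3.0) / 6.0

def hardshrink(x, lambd=0.5):
    return x if abs(x) > lambd else 0.0

def softshrink(x, lambd=0.5):
    if x > lambd:
        return x - lambd
    if x < -lambd:
        return x + lambd
    return 0.0

def threshold(x, thresh, value):
    # keep x if x > threshold, otherwise replace it with value
    return x if x > thresh else value

def sigmoid(x):
    # split by sign so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def softplus(x, beta=1.0):
    # (1/beta) * log(1 + exp(beta * x)), rewritten to avoid overflow
    bx = beta * x
    return (max(bx, 0.0) + math.log1p(math.exp(-abs(bx)))) / beta

def log_sigmoid(x):
    # log(sigmoid(x)) = -softplus(-x)
    return -softplus(-x)

def silu(x):
    return x * sigmoid(x)

def mish(x):
    return x * math.tanh(softplus(x))

def gelu(x):
    # exact GELU: x * Phi(x), where Phi is the standard normal CDF
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def softsign(x):
    return x / (1.0 + abs(x))

def tanhshrink(x):
    return x - math.tanh(x)

def glu(values):
    # split the list in half into a and b, return a * sigmoid(b)
    if len(values) % 2 != 0:
        raise ValueError("GLU needs an even-length input")
    half = len(values) // 2
    a, b = values[:half], values[half:]
    return [ai * sigmoid(bi) for ai, bi in zip(a, b)]

if __name__ == "__main__":
    print(relu6(7.5))                 # 6.0
    print(glu([1.0, 2.0, 0.0, 0.0]))  # [0.5, 1.0]
```

Writing the formulas as scalar functions keeps each one close to its textbook definition; `sigmoid` and `softplus` are rearranged only so that `math.exp` cannot overflow for large inputs.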

Non-linear Activations (other)

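| Activation | Description | Notes |
|---|---|---|
| nn.Softmin | Applies the Softmin function to an n-dimensional input Tensor. | Rescales the input so that the elements of the output Tensor lie in the range [0, 1] and sum to 1 along each slice taken in the direction given by `dim`. Softmin is order-reversing: since $\text{Softmin}(x) = \text{Softmax}(-x)$, the smallest input becomes the largest output. |
| nn.Softmax | Applies the Softmax function to an n-dimensional input Tensor. | Rescales the input so that the elements of the output Tensor lie in the range [0, 1] and sum to 1 along each slice taken in the direction given by `dim`. Softmax is order-preserving: the largest input remains the largest output. |
| nn.Softmax2d | Applies SoftMax over features to each spatial location. | Takes no `dim` argument: for an input of shape $(N, C, H, W)$ or $(C, H, W)$, softmax is computed over the channel dimension $C$ separately at each spatial location $(h, w)$. |
| nn.LogSoftmax | Applies the $\log(\text{Softmax}(x))$ function to an n-dimensional input Tensor. | Applies Softmax to the input along `dim`, then takes the logarithm of the result. |
| nn.AdaptiveLogSoftmaxWithLoss | Efficient softmax approximation (adaptive softmax). | Labels passed to this module should be sorted by frequency (the most frequent label has index 0); the sorted labels are then partitioned into clusters at the boundaries given by `cutoffs`. |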
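The notes above on `dim`, channel-wise Softmax2d, and frequency-based clustering can be sketched in pure Python. This is an assumption-laden illustration, not PyTorch's code: `softmax_matrix`, `softmax2d`, and `cluster_of` are hypothetical helpers, `softmax2d` takes a single sample as a nested list indexed `[c][h][w]` (no batch dimension), and `cluster_of` assumes a label belongs to cluster $i$ when `cutoffs[i-1] <= label < cutoffs[i]`, with cluster 0 being the head.

```python
import math
from bisect import bisect_right

def softmax(xs):
    # subtract the max before exponentiating (numerical stability);
    # outputs lie in [0, 1] and sum to 1
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def softmin(xs):
    # Softmin(x) = Softmax(-x): the smallest input receives the largest weight
    return softmax([-x for x in xs])

def log_softmax(xs):
    # log(Softmax(x)) computed as x - logsumexp(x), which avoids log(0)
    m = max(xs)
    lse = m + math.log(sum(math.exp(x - m) for x in xs))
    return [x - lse for x in xs]

def softmax_matrix(rows, dim):
    # 2-D illustration of `dim`: dim=1 normalizes each row, dim=0 each column
    if dim == 1:
        return [softmax(r) for r in rows]
    cols = [softmax(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def softmax2d(chw):
    # chw: nested list indexed [c][h][w]; softmax over channels at each (h, w)
    C, H, W = len(chw), len(chw[0]), len(chw[0][0])
    out = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    for h in range(H):
        for w in range(W):
            probs = softmax([chw[c][h][w] for c in range(C)])
            for c in range(C):
                out[c][h][w] = probs[c]
    return out

def cluster_of(label, cutoffs):
    # Hypothetical helper: for frequency-sorted labels, 0 = head,
    # i = i-th tail cluster, i.e. cutoffs[i-1] <= label < cutoffs[i]
    return bisect_right(cutoffs, label)

if __name__ == "__main__":
    print(softmax_matrix([[1.0, 2.0], [3.0, 4.0]], dim=1))
    print([cluster_of(t, [10, 100, 1000]) for t in (0, 9, 10, 99, 100, 5000)])  # [0, 0, 1, 1, 2, 3]
```

Computing `log_softmax` as $x - \operatorname{logsumexp}(x)$ rather than literally taking `log(softmax(x))` gives the same values while avoiding `log(0)` when a probability underflows.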